Unless you work in the streaming business every day, it can be hard to follow the nuances of these technologies and understand their impact on your long-term strategy. HLS and HDS are both HTTP-based streaming protocols and sound very similar, but they are fundamentally different.

HLS stands for HTTP Live Streaming and is Apple’s proprietary streaming format based on MPEG2-TS. It is popular because it provides the only way to deliver advanced streaming to iOS devices. It is often mistakenly described as HTML5 streaming, but it is not part of HTML5.

Apple has documented HTTP Live Streaming as an Internet-Draft (Individual Submission), the first stage in the process of submitting it to the IETF as an Informational Standard. However, while Apple has submitted occasional minor updates to the draft, no additional steps appear to have been taken towards IETF standardization [Wikipedia].

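HDS stands for HTTP Dynamic Streaming and is Adobe’s format for delivering fragmented MP4 files (fMP4). HLS uses MPEG-2 Part 1 (Systems, which defines the transport stream), while HDS uses MPEG-4 Part 14 (the MP4 file format) and Part 12 (the ISO base media file format).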

Both formats are MPEG-based, so why should you care? Adobe, Microsoft and Transitions wrote an interesting white paper highlighting the advantages of fMP4 (HDS) over MPEG2-TS (HLS).

Separating Content and Metadata

With MP4 files, metadata can be stored separately from audio and video content (or “media data”). Conversely, M2TS multiplexes (combines) multiple elementary streams along with header/metadata information […] This separation of content and metadata allows specification of a format using movie fragments optimized specifically for adaptive switching between different media data, whether that media data is varying video qualities, multiple camera angles, different language tracks or even different captioning or timed-text data.

All crucial features – multiple camera angles for interactive TV/live sports, language tracks and especially effective captioning support with the upcoming government requirements.
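To illustrate the separation, the sketch below walks the top-level boxes of an ISO base media file (MPEG-4 Part 12). Each box starts with a 32-bit size and a four-character type, so metadata boxes (`moov`, `moof`) can be located and read without touching the media payload in `mdat`. It is a minimal sketch, assuming a well-formed byte string; the sample file is synthetic and its box payloads are placeholders rather than valid metadata.

```python
import struct
from typing import Iterator, Optional, Tuple


def iter_boxes(data: bytes, offset: int = 0, end: Optional[int] = None) -> Iterator[Tuple[str, int, int]]:
    """Yield (box_type, payload_offset, payload_size) for each box in data[offset:end]."""
    end = len(data) if end is None else end
    while offset + 8 <= end:
        size, box_type = struct.unpack_from(">I4s", data, offset)
        header = 8
        if size == 1:  # a 64-bit 'largesize' follows the type field
            size = struct.unpack_from(">Q", data, offset + 8)[0]
            header = 16
        elif size == 0:  # the box extends to the end of the enclosing data
            size = end - offset
        if size < header or offset + size > end:
            raise ValueError(f"malformed box at offset {offset}")
        yield box_type.decode("ascii"), offset + header, size - header
        offset += size


def make_box(box_type: str, payload: bytes) -> bytes:
    """Build a box with a plain 32-bit size header."""
    return struct.pack(">I4s", 8 + len(payload), box_type.encode("ascii")) + payload


# Hypothetical fragmented file: only the box layout is meaningful, payloads are filler.
sample = (
    make_box("ftyp", b"iso6" + b"\x00\x00\x00\x00")
    + make_box("moov", b"\x00" * 16)  # movie-level metadata (tracks, codecs)
    + make_box("moof", b"\x00" * 12)  # fragment-level metadata (sample sizes, timing)
    + make_box("mdat", b"\xAA" * 64)  # media samples only
)

for box_type, payload_offset, payload_size in iter_boxes(sample):
    print(box_type, payload_offset, payload_size)
```

Because `iter_boxes` returns offsets rather than copying payloads, a tool could read only the `moof` metadata and skip the `mdat` bytes entirely; nested boxes can be walked by calling it again with a payload's start and end offsets.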

Independent Track Storage

In fragmented MP4 (fMP4), elementary streams are not multiplexed and are independently stored separate from metadata, as noted above. As such, fMP4 file storage is location agnostic and can store audio- or video-only elementary streams in separate locations that are addressable from XML-based manifest files.

A must-have feature, especially for large media libraries like Netflix's:

This plethora of combinations can quickly become unwieldy, with upwards of 4000 possible transport stream combinations – a multiple far above the 40 audio, video and subtitle tracks required for the DVD example. Netflix® estimates using an M2TS / HLS approach for their library could result in several billion assets, due to combinatorial complexity—a situation they avoid by using an fMP4 elementary stream approach, by selecting and combining tracks on the player.
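The arithmetic behind the combinatorial explosion is simple: with multiplexed streams, every combination of one video, one audio and one subtitle track is a separate asset (a product), while with separately stored elementary streams each track exists once and the player combines them (a sum). A minimal sketch with hypothetical track counts, not the white paper's DVD or Netflix figures:

```python
from math import prod
from typing import Dict


def muxed_variants(track_counts: Dict[str, int]) -> int:
    """Every combination of one track per type needs its own multiplexed stream."""
    return prod(track_counts.values())


def elementary_tracks(track_counts: Dict[str, int]) -> int:
    """Each track is stored once and combined on the player."""
    return sum(track_counts.values())


# Hypothetical title: 8 video bitrates, 10 audio languages, 12 subtitle languages.
title = {"video": 8, "audio": 10, "subtitles": 12}
print(muxed_variants(title), elementary_tracks(title))  # 960 30
```

Each extra language multiplies the muxed count but only adds one entry to the elementary-stream count, which is the same effect the caching argument further down relies on.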

Trick-play modes

Trick-play modes—such as fast-forward or reverse, slow motion, and random access to chapter points—can be accomplished via intelligent use of metadata.

Less essential, but certainly useful.

Backwards compatibility to MPEG-2 Transport Streams

Separating timing information from elementary video and audio streams allows conversion from several fragmented MP4 elementary streams into a multiplexed M2TS.

HLS is similar to what FLV with On2 VP6 was before Adobe endorsed H.264 – a risky dead-end format for media libraries.

Seamless stream splicing

Fragmented MP4 audio or video elementary streams can be requested separately because each movie fragment only contains one media type.[…] Using independent sync points for media types along with simple concatenation splicing yields shortened segment lengths: M2TS segment lengths are typically ten seconds, limiting the frequency of switching and requiring extra bandwidth and processing. Segment lengths for fMP4 can be as low as 1 or 2 seconds, as they don’t consume more bandwidth or require extra processing.

Absolutely crucial for live sports – it means the difference between close to real-time (TV broadcast), and tens of seconds delayed.

Integrated Digital Rights Management (DRM)

Inherent to the MPEG-4 specification, digital rights and—if necessary—encryption, can both be applied at the packet level. In addition, the emerging MPEG Common Encryption (ISO/IEC 23001-7 CENC) scheme can be combined with MPEG-4 Part 12 to enable DRM and elementary stream encryption.

Without DRM, there is no premium content.

Caching efficiencies

When considering comparative complexity, noted in the sidebar above, it’s also worth considering the effect that all the possible combinations have on cache efficiency. Each track and bitrate combination required for M2TS delivery means a higher likelihood that an edge cache will not have the particular combination, resulting in a request back to the origin server.

Important for HD content and increasing traffic volumes; it also directly saves delivery costs.

Bandwidth efficiencies in segment alignment

A key factor when generating multiple files at different bitrates is segment alignment. HTTP adaptive streams via fMP4 rely on movie fragments each being aligned to exactly the same keyframe, whether for on-demand or live streaming (live requires simultaneous encoding of all bitrates to guarantee time alignment). A packaging tool that is fragment-alignment aware maintains elementary stream alignment for previously recorded content.

As above, these efficiencies reduce bandwidth and improve both the user experience and battery life.
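An alignment-aware packager has to confirm that every rendition places its keyframes, and therefore its fragment boundaries, at the same timestamps. A minimal sketch of that check, assuming keyframe times have already been extracted for each rendition in a common timescale; the rendition names and timestamps are made up for illustration.

```python
from typing import Dict, List


def misaligned_renditions(keyframes: Dict[str, List[int]], reference: str) -> List[str]:
    """Return renditions whose keyframe timestamps differ from the reference rendition."""
    expected = keyframes[reference]
    return [name for name, times in keyframes.items() if times != expected]


# Hypothetical keyframe times in a 90 kHz timescale (2-second GOPs).
renditions = {
    "400k": [0, 180000, 360000, 540000],
    "1200k": [0, 180000, 360000, 540000],
    "3000k": [0, 180000, 363003, 540000],  # drifted: a switch at ~4 s would not land on a keyframe
}
print(misaligned_renditions(renditions, reference="400k"))  # ['3000k']
```

A real packager would compare fragment boundaries rather than whole lists, but the principle is the same: any rendition that disagrees with the others breaks clean bitrate switching at that point.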

And what does this mean for open standards? An open standard is certainly still quite a while away, especially since codec licensing costs are making HTML5 codec standardization very challenging, but the most promising initiative is MPEG-DASH.

MPEG-DASH. MPEG, the standards body responsible for MPEG-2 and MPEG-4, is addressing dynamic adaptive streaming over HTTP (MPEG-DASH) through the use of four key profiles—two around CFF for fMP4 and two for MPEG-2 TS. For fMP4 both the live (ISO LIVE) and on-demand (ISO ON DEMAND) profiles reference Common Encryption (CENC or ISO 23001-7). ISO LIVE, a superset of live and on-demand profiles, is close to Microsoft Smooth Streaming, meaning Microsoft could easily update its server and client viewers to meet DASH compliance.

(01/03/2012 – Update) In addition, when comparing the two different approaches, it’s important to understand the following:

- TV is moving to “the web”. That means watching more content in traditional online venues (browsers, mobile apps, etc.) and also the development of “hybrid” TV models, where MVPDs use web/OTT TV technologies to send broadcast TV to connected TVs as well as browsers and mobile devices.

- While MPEG2 is “the” technology stack for legacy IPTV, the stack that will be used for this new OTT/Web/Hybrid model is still being determined. This is why the whitepaper is interesting.

- One thing that is clear is that HTTP streaming will be the backbone of the stack. This is why it’s important that the container chosen for this new model work well in the context of HTTP streaming. How well it works for legacy streaming is not relevant.

Conclusion

HDS (fMP4) has some key advantages over HLS, and is recognized by the industry as the better future solution.

- Reduces delivery costs

- Supports more features, most of them critical

- Better user experience

- Future safe format

iOS does not support fMP4 yet and it’s hard to predict Apple’s strategy, but given fMP4’s full backward compatibility with HLS, and the fact that media servers like Flash Media Server support both HDS and HLS with one workflow, fMP4 is the best investment for your future media delivery strategy.

There are a number of things which I would disagree with:

"All crucial features – multiple camera angles for interactive TV/live sports, language tracks and especially effective captioning support with the upcoming government requirements."

Erm… captioning support is also easily available with MPEG-TS (and is in fact unrelated to the container in this case); captions are delivered to televisions, after all.

"Independent Track Storage"

MPEG-TS is far more flexible here – you can do a lot of remuxing operations continuously, whereas with MP4 you could only process one segment at a time. A proper implementation would just cache a full transport stream and the web server could separate packets and send only the appropriate PIDs to the user.

"Backwards compatibility to MPEG-2 Transport Streams"

Strictly speaking this isn't quite true for strict spec compliance (the kind you would need for delivery to set top boxes). I won't go into the details.

"Absolutely crucial for live sports – it means the difference between close to real-time (TV broadcast), and tens of seconds delayed."

MPEG-TS has a well-defined buffering model which allows playback devices to start as soon as the stream is ready. MP4 was originally designed as a storage format and so playback devices basically just attempt to guess the amount to buffer which increases playback start times.

"Integrated Digital Rights Management (DRM)"

MPEG-TS is also designed around encryption and the number of MPEG-TS CA deployments are orders of magnitude larger than any MP4 CA deployments.

There are tons of other things in the White Paper which imply MP4 can do things MPEG-TS can't.

At the end of the day you are trying to push a storage format into a realm it was not designed for.

Of course in the interests of balance the BIG features for MP4 are lower overhead and HTML5 support. Owing to patents MPEG-TS will not be royalty free for a while.

For broadband CC delivery, I would look into implementation details for standards like the more complex SMPTE-TT, rather than the broadcast model – https://www.smpte.org/sites/default/files/st2052-…. I do agree, though, that it doesn't have to be embedded in the actual media.

I'm not sure what you mean by MPEG-TS CA, but overall, encrypted HLS is not DRM in any way and is easy to circumvent. In some cases those limitations might be part of iOS, not the format. Both Flash Access and PlayReady take full advantage of fMP4.

I'll get back to you on the other topics, since they might need a longer response.

Overall I don't think the purpose of this is to say that HLS is not a good solution – it's the only one for iOS and a lot of devices today – but if you want to invest in a future format, it should be fMP4 rather than MPEG2-TS due to the architectural structure.

Kieran, a couple more comments:

————–

“Independent Track Storage”

MPEG-TS is far more flexible here – you can do a lot of remuxing operations continuously, whereas with MP4 you could only process one segment at a time. A proper implementation would just cache a full transport stream and the web server could separate packets and send only the appropriate PIDs to the user.

[Jens] MPEG2-TS is a muxed format. You can’t send the audio, video, etc. independently and then mux them at the client. A processor/tool can’t operate on just the audio or just the video efficiently, since they have to read all of the data to get a single track.

————–

“Backwards compatibility to MPEG-2 Transport Streams”

Strictly speaking this isn’t quite true for strict spec compliance (the kind you would need for delivery to set top boxes). I won’t go into the details.

[Jens] You might have misinterpreted this; it mostly means that the information in the MP4 file tends to be a superset of what is in the TS, meaning that it is straightforward to create a valid TS from an MP4, but lots of information gets dropped when going the other way.
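One concrete step in creating a TS from an MP4 is rescaling sample times: MP4 tracks carry times in a per-track timescale, while MPEG-2 TS PTS/DTS values are 33-bit counters at 90 kHz. A minimal sketch of that conversion, assuming non-negative sample times, rounding to the nearest tick and wrapping at 2^33; it deliberately ignores buffering-model parameters like the ones debated in this thread.

```python
PTS_CLOCK_HZ = 90_000
PTS_WRAP = 1 << 33  # PTS/DTS are 33-bit fields


def mp4_time_to_pts(time_in_timescale: int, timescale: int, offset_ticks: int = 0) -> int:
    """Rescale a non-negative MP4 sample time to a 90 kHz PTS value, wrapping at 2**33."""
    ticks = (time_in_timescale * PTS_CLOCK_HZ + timescale // 2) // timescale  # round half up
    return (ticks + offset_ticks) % PTS_WRAP


# 2.5 s in a 48 kHz audio track, and one 29.97 fps frame duration in a 30000-unit video track.
print(mp4_time_to_pts(120_000, 48_000))  # 225000
print(mp4_time_to_pts(1001, 30_000))  # 3003
```

`offset_ticks` is a hypothetical knob for starting the output at a nonzero PTS, which transport streams commonly do.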

————–

“Absolutely crucial for live sports – it means the difference between close to real-time (TV broadcast), and tens of seconds delayed”

MPEG-TS has a well-defined buffering model which allows playback devices to start as soon as the stream is ready. MP4 was originally designed as a storage format and so playback devices basically just attempt to guess the amount to buffer which increases playback start times.

[Jens] This is more of a criticism of the use of TS streams for HTTP streaming than for traditional TS streaming. If we were talking about traditional streaming, the criticism would be true, but for HTTP streaming the “archive style” format is easier to deal with and allows the HTTP streaming client to make assumptions that enable low-latency HTTP streaming. It is possible you could get this from a TS stream, but you would need to place lots of non-standard restrictions on the TS. In fMP4 you get this for free.

————–

“Integrated Digital Rights Management (DRM)”

MPEG-TS is also designed around encryption and the number of MPEG-TS CA deployments are orders of magnitude larger than any MP4 CA deployments.

[Jens] CA != DRM. There is no standard way for a modern DRM system to protect a TS stream. There is a standard way (MPEG CEF) to DRM-protect an MP4 file. Please don’t confuse the use of MPEG2-TS in a legacy TV setting with its use in a modern OTT/Web/Hybrid TV model based on HTTP streaming.

————–

There are tons of other things in the White Paper which imply MP4 can do things MPEG-TS can’t.

At the end of the day you are trying to push a storage format into a realm it was not designed for.

[Jens] Neither MPEG2-TS or fMP4 were “designed” for OTT/Web/Hybrid TV. In this sense, both are being proposed as a possible way to implement Web TV using HTTP streaming. This whitepaper notes a significant number of reasons that fMP4 is better for this purpose. HTTP streaming (purposely) blurs the line between “streaming” and “storage” to work with HTTP, a protocol built around stored cacheable objects.

————–

Of course in the interests of balance the BIG features for MP4 are lower overhead and HTML5 support. Owing to patents MPEG-TS will not be royalty free for a while.

[Jens] Agreed.

"MPEG2-TS is a muxed format. You can’t send the audio, video, etc. independently and then mux them at the client."

The server can drop a particular TS stream using PID filtering. For example, if a stream had English, French and German tracks, the user could request only the English track and the server could filter out any TS packets with the French and German PIDs, while caching only one copy of the stream. It's an inherent part of MPEG-TS, whereas with MP4 you have to artificially split the tracks up.

Or, if you do require separation of audio tracks (let's say you have three languages and ten video bitrates/resolutions), the server can trivially multiplex each audio/video/subtitle stream into an MPEG-TS, since the MPEG-TS packets are already in the correct form. The equivalent work for MP4 is much greater, which is one reason why reconstruction is done on the player side.
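PID filtering is cheap because every TS packet is a fixed 188 bytes that starts with the sync byte 0x47 and carries a 13-bit PID in its next two bytes. A minimal sketch of such a filter; the PID assignments are hypothetical, and a real remuxer would also rewrite the PMT so it no longer lists the dropped tracks, which is not shown.

```python
from typing import Iterable

TS_PACKET_SIZE = 188
SYNC_BYTE = 0x47


def packet_pid(packet: bytes) -> int:
    """Extract the 13-bit PID from a TS packet header."""
    if len(packet) != TS_PACKET_SIZE or packet[0] != SYNC_BYTE:
        raise ValueError("not an aligned 188-byte TS packet")
    return ((packet[1] & 0x1F) << 8) | packet[2]


def filter_pids(stream: bytes, keep: Iterable[int]) -> bytes:
    """Return only the packets whose PID is in `keep` (PSI tables are not rewritten)."""
    wanted = set(keep)
    out = bytearray()
    for i in range(0, len(stream) - TS_PACKET_SIZE + 1, TS_PACKET_SIZE):
        packet = stream[i:i + TS_PACKET_SIZE]
        if packet_pid(packet) in wanted:
            out += packet
    return bytes(out)


def make_packet(pid: int) -> bytes:
    """Build a dummy payload-only packet (continuity counter 0, stuffing bytes)."""
    header = bytes([SYNC_BYTE, (pid >> 8) & 0x1F, pid & 0xFF, 0x10])
    return header + b"\xFF" * (TS_PACKET_SIZE - 4)


# Hypothetical PIDs: 0x000 PAT, 0x100 PMT, 0x101 video, 0x102 English, 0x103 French, 0x104 German.
stream = b"".join(make_packet(p) for p in [0x000, 0x100, 0x101, 0x102, 0x103, 0x104, 0x101, 0x102])
english_only = filter_pids(stream, keep={0x000, 0x100, 0x101, 0x102})
print(len(english_only) // TS_PACKET_SIZE)  # 6
```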

"meaning that it is straightforward to create a valid TS from an MP4,"

Timing information from the MPEG-TS is lost when going to MP4 and cannot be recovered exactly; virtually all implementations reconstruct it through guesswork, which in some cases may not work with picky playback devices (i.e. set-top boxes). For example, there is information in MPEG-TS that says to buffer for 0.3 seconds; in MP4 there is no such information (the way it's done is to make the segment sizes reflect the buffer time), and the player has to guess what these values are. FWIW, HTML5 originally did not have a way to specify buffering times, though Flash did.

" It is possible you could get this from a TS stream, but you would need to place lots of non-standard restrictions on the TS."

You don't have to put anything non-standard in the TS because a playback device would just use the PCR of the MPEG-TS to know when to start playing so that its internal buffers don't underflow. I think the point you're trying to make is that the playback device has to wait for the whole TS segment before starting, and these segments can be quite long. In fact, with MPEG-TS a playback device can start playing before the whole segment is received, so there's no drawback in that sense. Anyway, my main assertion that latency is no different with MPEG-TS still stands.
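For reference, the PCR mentioned here lives in the adaptation field of a TS packet: a 33-bit base at 90 kHz plus a 9-bit extension, combined into a 27 MHz clock value. A minimal parser, assuming an aligned 188-byte packet; the test packet is synthetic, and the buffer pacing a player would build on top of successive PCRs is not shown.

```python
from typing import Optional


def read_pcr(packet: bytes) -> Optional[int]:
    """Return the PCR of a TS packet in 27 MHz ticks, or None if it carries no PCR."""
    if len(packet) != 188 or packet[0] != 0x47:
        raise ValueError("not an aligned 188-byte TS packet")
    adaptation_field_control = (packet[3] >> 4) & 0x3
    if adaptation_field_control not in (2, 3):  # 2 = adaptation field only, 3 = both
        return None
    af_length = packet[4]
    if af_length < 7 or not packet[5] & 0x10:  # packet[5] is the flags byte, 0x10 = PCR_flag
        return None
    b = packet[6:12]
    base = (b[0] << 25) | (b[1] << 17) | (b[2] << 9) | (b[3] << 1) | (b[4] >> 7)
    extension = ((b[4] & 0x01) << 8) | b[5]
    return base * 300 + extension


def make_pcr_packet(base: int, extension: int) -> bytes:
    """Build a dummy adaptation-field-only packet carrying the given PCR."""
    pcr = bytes([
        (base >> 25) & 0xFF, (base >> 17) & 0xFF, (base >> 9) & 0xFF, (base >> 1) & 0xFF,
        ((base & 0x1) << 7) | 0x7E | ((extension >> 8) & 0x1),  # 6 reserved bits set to 1
        extension & 0xFF,
    ])
    af = bytes([0x10]) + pcr  # flags byte with PCR_flag set, then the 6 PCR bytes
    header = bytes([0x47, 0x01, 0x00, 0x20])  # PID 0x100, adaptation field only
    return header + bytes([183]) + af + b"\xFF" * (183 - len(af))


pkt = make_pcr_packet(base=900_000, extension=150)  # 10 s worth of 90 kHz ticks, plus 150
print(read_pcr(pkt))  # 270000150
```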

" CA != DRM. There is no standard way for a modern DRM system to protect a TS stream"

I agree that CA != DRM but this is a semantic point. CA technology can be used to provide DRM and vice versa. Vendors are well versed in providing systems that do DRM/CA and if MPEG-TS for HLS is successful, such products will appear.

"Neither MPEG2-TS or fMP4 were “designed” for OTT/Web/Hybrid TV."

I also agree but MPEG-TS is closer to the goals of OTT/Web/Hybrid.

Hi Kieran, thanks for posting your thoughts. I've made a couple of comments below:

"The server can drop a particular TS stream using PID filtering"

[KS] A "smart origin" could certainly do that, but the nice thing about fMP4 is that you could do the same thing using just byte-range requests to a regular HTTP server. This makes delivering VOD content through a CDN much more efficient, too, since many CDNs' edge caches will pull the entire VOD asset to the edge of the network at one time.
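With fMP4 on a plain HTTP server, a client that knows where each fragment sits in the file (for example from a segment index) can fetch just that fragment with a standard HTTP `Range` request. A minimal sketch using Python's `urllib.request`; the URL and the (offset, size) index are hypothetical, and the request is only constructed, not sent.

```python
import urllib.request
from typing import List, Tuple

# Hypothetical fragment index: (byte offset, byte size) of each movie fragment in the file.
FRAGMENT_INDEX: List[Tuple[int, int]] = [(1_024, 250_000), (251_024, 248_512), (499_536, 251_300)]


def range_header(offset: int, size: int) -> str:
    """HTTP byte ranges are inclusive on both ends."""
    return f"bytes={offset}-{offset + size - 1}"


def fragment_request(url: str, fragment_number: int) -> urllib.request.Request:
    """Build a GET request for one fragment of a single fMP4 file."""
    offset, size = FRAGMENT_INDEX[fragment_number]
    return urllib.request.Request(url, headers={"Range": range_header(offset, size)})


req = fragment_request("http://cdn.example.com/movie_1200k.mp4", 1)
print(req.get_header("Range"))  # bytes=251024-499535
```

Because every request targets the same file URL, an edge cache that has pulled the whole asset can answer any fragment request itself.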

"You don’t have to put anything non-standard in the TS because a playback device would just use the PCR of the MPEG-TS to know when to start playing so that its internal buffers don’t underflow."

[KS] True, but that operation requires buffering. I think Jens' point here is that in fMP4 all the samples are time aligned, no buffering required to ensure proper muxing at the client. You could enforce time ordering in a TS, but that again is non-standard. I concede this is a minor point, but not having to deal with additional muxing at the client allows for faster startup times, so having it built into the container is nice.

” CA != DRM. There is no standard way for a modern DRM system to protect a TS stream”

I agree that CA != DRM but this is a semantic point. CA technology can be used to provide DRM and vice versa. Vendors are well versed in providing systems that do DRM/CA and if MPEG-TS for HLS is successful, such products will appear.

[KS] In this case I believe Jens is referring to *existing* MPEG-TS content protection schemes as "CA". True that vendors will build DRM systems on top of whatever technology distribution succeeds at market, but if you need to point your customers to a solution today then your only choice is CENF, which works with fMP4.

“Absolutely crucial for live sports – it means the difference between close to real-time (TV broadcast), and tens of seconds delayed”

MPEG-TS has a well-defined buffering model which allows playback devices to start as soon as the stream is ready. MP4 was originally designed as a storage format and so playback devices basically just attempt to guess the amount to buffer which increases playback start times.

[Nitin] It may seem so, but that is not very useful for HTTP streaming. Had this been byte streaming of a single rendition, I might support your point. But with HTTP streaming, where you fetch the stream segment by segment, an fMP4 segment can be more useful than an MPEG-TS segment for purposes such as seeking within the segment or switching to another rendition (a different bitrate) of the same stream.

There must be substantial reasons why the HLS format recommends that clients accept a latency of three MPEG-TS segments, even if, as you say, they could start playback as soon as bytes are available.

I suppose nobody would doubt that MP4 has been an excellent storage format, because it lets you extract as much information as possible with efficient algorithms. With HTTP streaming, which is really a series of downloads of small segments (unlike RTMP or other byte-streaming formats), an MP4 segment may be much more useful than an MPEG-TS segment. Imagine having as much information as possible inside each HTTP segment, extractable efficiently on the client side, and all the nice things you could achieve with HTTP streaming. So, as Jens said, it is a bet on the future.

Hi,

I think there are some mistakes in your article. Unlike HDS, HLS is not proprietary. The HLS specifications are completely documented in draft release 7:

http://tools.ietf.org/html/draft-pantos-http-live…

HDS is a good example of Adobe saying something is open when in reality it is completely closed.

For HLS you don't need a streaming server; for HDS you need an FMS server.

Why doesn't Adobe implement HLS for Flash?

We implemented it for Flash as an OSMF plugin, for Silverlight, and for many other devices.

A demo is here:

http://onlinelib.de/vcs-multiplexer.html

Another false statement is that HLS segments have to be 10 seconds long; that is only a recommendation, not a requirement. In our HLS demo we create 3-second segments without any problem. For live, we create dynamic m3u8 files with three 1-second segments, and in the next rotation the segments are 5 seconds long. This is also something you can't do with HDS.
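Variable segment durations map directly onto the HLS playlist format: each segment carries its own `#EXTINF` duration, and `#EXT-X-TARGETDURATION` only has to be an upper bound (each `EXTINF`, rounded to the nearest integer, must not exceed it). A minimal sketch of a sliding-window live playlist generator; the segment names and durations are made up, and the sketch keeps the target duration fixed across reloads, which is the safe choice under the current HLS specification.

```python
from typing import List, Tuple


def live_playlist(segments: List[Tuple[str, float]], target_duration: int, window: int = 3) -> str:
    """Render the most recent `window` segments as a live HLS media playlist."""
    recent = segments[-window:]
    for uri, duration in recent:
        if int(duration + 0.5) > target_duration:  # EXTINF rounded to the nearest integer
            raise ValueError(f"{uri} is longer than the target duration")
    first_sequence = len(segments) - len(recent)  # segments are numbered from 0
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",  # version 3 allows fractional EXTINF durations
        f"#EXT-X-TARGETDURATION:{target_duration}",
        f"#EXT-X-MEDIA-SEQUENCE:{first_sequence}",
    ]
    for uri, duration in recent:
        lines += [f"#EXTINF:{duration:.3f},", uri]
    return "\n".join(lines) + "\n"  # no EXT-X-ENDLIST: the stream is still live


# Hypothetical stream: three 1-second segments, then the window rolls onto a 5-second segment.
segments = [("seg0.ts", 1.0), ("seg1.ts", 1.0), ("seg2.ts", 1.0)]
first = live_playlist(segments, target_duration=5)
segments.append(("seg3.ts", 5.0))
second = live_playlist(segments, target_duration=5)
print(second)
```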

DRM?

Flash Access has nothing to do with real DRM: the static master keys are included inside the license module that the Flash Player loads and installs on the client. You can also very simply copy the license files to another client and correct the simple hash calculation. It's something similar to RTMP(E). That's one of the reasons why Amazon changed from Flash Access to Silverlight PlayReady.

The good thing is that Adobe opened up the NetStream object, where you can simply add any encryption you like, but not if you use the Adobe Flash HLS packager 🙂: they forbid you to add your own encryption technology when you use the Adobe packager to generate HDS streams.

Maybe you could also compare this and write something about it.

The new iOS 5 has a byte-range mode which allows segments to be loaded in one session without disconnecting. HDS does not support that. This feature is important because it reduces the load on the server or CDN.
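The byte-range mode is expressed in the playlist with the `#EXT-X-BYTERANGE:<length>@<offset>` tag (HLS protocol version 4), which lets several segments point into one file as sub-ranges. A minimal sketch that emits such a VOD playlist; the file name and segment sizes are hypothetical.

```python
from typing import List, Tuple


def byterange_playlist(uri: str, segments: List[Tuple[float, int]]) -> str:
    """VOD playlist where each segment is a (duration, byte length) slice of one file."""
    target = max(int(duration + 0.5) for duration, _ in segments)  # nearest-integer rounding
    lines = ["#EXTM3U", "#EXT-X-VERSION:4", f"#EXT-X-TARGETDURATION:{target}",
             "#EXT-X-MEDIA-SEQUENCE:0"]
    offset = 0
    for duration, length in segments:
        lines += [f"#EXTINF:{duration:.3f},", f"#EXT-X-BYTERANGE:{length}@{offset}", uri]
        offset += length  # the next slice starts right after this one
    lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"


# Hypothetical file: three slices of one transport stream (sizes are multiples of 188 bytes).
playlist = byterange_playlist("main_1200k.ts", [(10.0, 1_504_000), (10.0, 1_497_608), (9.5, 1_420_152)])
print(playlist)
```

Every entry points at the same URI, so a client can keep one connection open and issue successive range requests against a single cached object.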

MPEG-TS is an industry standard; HDS is not.

Let me know what you think.

Gary

http://onlinelib.de

In terms of openness, HDS and Smooth Streaming are both just implementations of fMP4, which is open as defined by mpeg-4. Sorry this wasn’t clear.

Here are the file specs of F4V/FLV (HDS):

http://www.adobe.com/devnet/f4v.html

HLS sometimes gets referred to as HTML5 streaming, which is wrong – it is not part of HTML5, but an IETF draft in Informational state pending for years.

HLS recommends 10 seconds, and yes, it can be shorter, but by design lower latency is easier to achieve with fMP4. Flash Access is a full high-end DRM and has nothing to do with RTMPe (here are some references: http://blogs.adobe.com/flashmedia/2011/03/flash-a…).

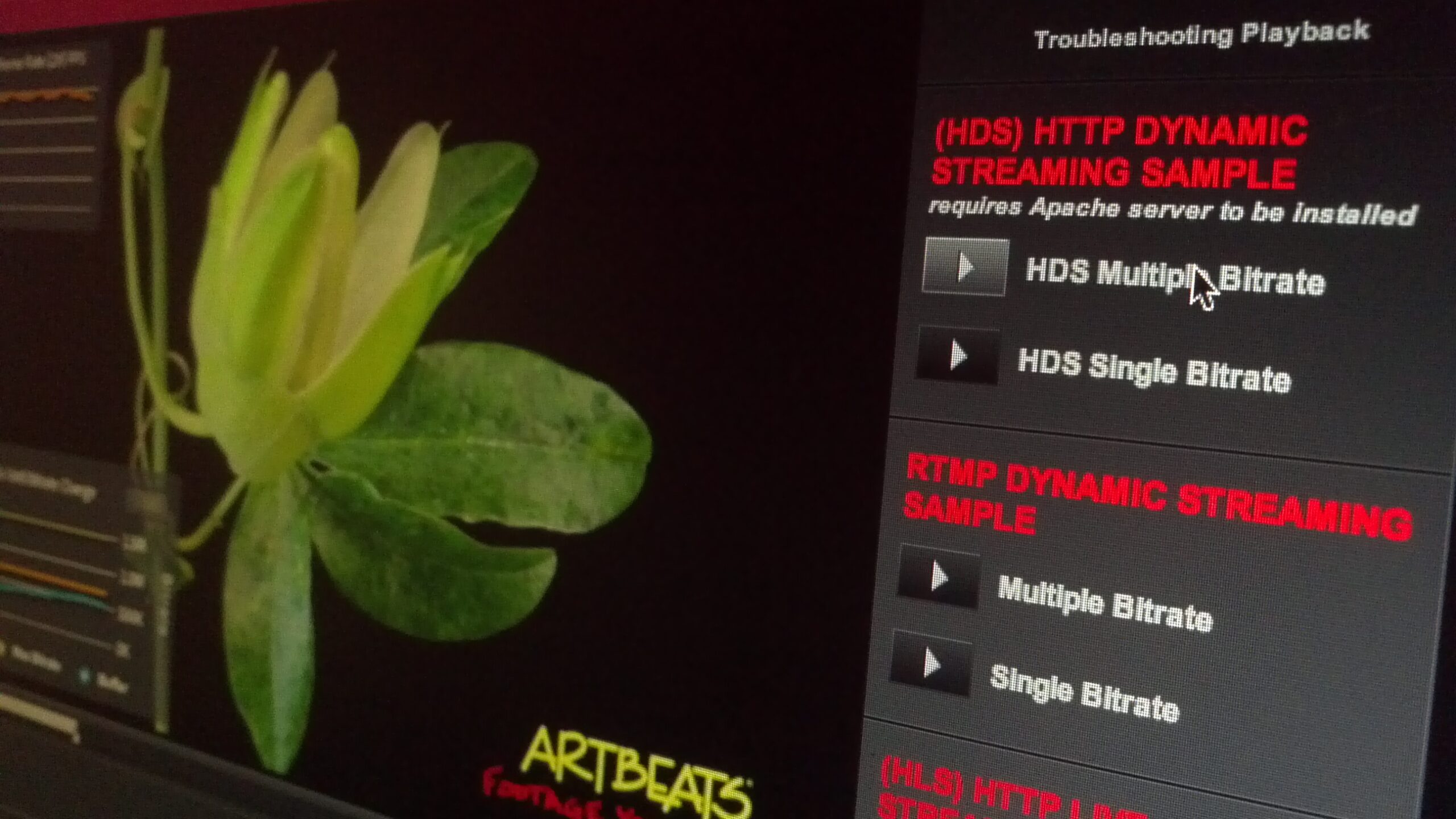

You don’t need an FMS server for HDS delivery, but an origin with the free HDS Apache module and regular HTTP caches.

Yes, there is a lot you can do with HLS, but it unnecessarily limits key functionality, and MPEG-DASH in my opinion is heading in the right direction by supporting both fMP4 and MPEG2-TS.

For HLS you don't need a streaming server; for HDS you need an FMS server.

[Nitin] This is interesting, but based on my personal experience I would put it differently.

HLS is more of a "create-consume" format, while HDS, apart from being "create-consume", is also a "state-based interactive delivery" format, where state information (or information from which state can be derived) can easily be transferred between client and server. HDS lets the server store all the information in a very efficient storage format, and clients today, or perhaps in the future, can make good use of that information. So I would say that being able to deploy a server is an extra advantage with HDS, whereas with HLS a server is extra overhead because of its delivery model.

Hi Jens,

You are right that for VOD you only need the Apache module, but for live you need at least an FMS.

–

HLS sometimes get referred to as HTML5 streaming, which is wrong

–

That's also right. Currently HTML5 has no streaming standard, and sometimes I read that HTML5 and MP4 are the streaming standard, which has nothing to do with the streaming we know from classic methods like RTMP, RTSP, etc. I think Apple wants to make HLS an HTML5 standard. That's why we see draft version 7 of HLS.

As far as I've heard, MPEG-DASH requires a license fee, doesn't it? Do you know more about this?

I tried to parse some fragments in Flash, and it also seems very easy to add MPEG-DASH to Flash, and from Flash via AIR to Android smartphones and tablets.

The problem I see is that Apple may not bring MPEG-DASH to iOS, but it matters because there are over 200 million iOS devices out there.

Let's see what the future of streaming brings us.

I wish you all a great 2012…

Gary

Keep in mind that MP4 carries MPEG LA licensing for commercial use, which makes it very hard for it to become an HTML5 standard (and as I mentioned before, Chrome decided to drop MP4 (H.264) in favor of WebM).

http://en.wikipedia.org/wiki/H.264/MPEG-4_AVC

On August 26, 2010 MPEG LA announced that H.264 encoded internet video that is free to end users will never be charged for royalties.[10] All other royalties will remain in place such as the royalties for products that decode and encode H.264 video.[11] The license terms are updated in 5-year blocks.[12]

MPEG-DASH in the current state supports both fMP4 and MPEG-TS.

Ha, one of the biggest differences I see is that while MS and Adobe seem to be very actively "evangelizing" against HLS, sponsoring critical papers and having employees write articles like this, Apple are not; they've just published the spec and left it at that.

Seriously, the main technical advantage of HDS over HLS is stream separation, which matters most if you're looking to efficiently stream video in multiple languages. The rest of the points are almost irrelevant or wrong (e.g. caching: how exactly does HDS have an advantage over HLS?)

The one thing I really don't like about HDS is its requirements of the origin, the heart of a streaming delivery network.

The origin is /required/ to run Apache, /required/ to run a (closed-source) Adobe plugin, and for every fragment request it needs to extract fragments from segment files. That's every request coming in to any edge server that has not cached the fragment (depending on how deep your caches are, or how optimistic you are about repeat requests, potentially very sub-optimal).

I did some benchmarking and posted it here: http://rdkls.blogspot.com/2011/12/benchmarking-ad…

Basically on my test machine (granted not a beast) the HDS module started falling over at about 500 concurrent requests, whereas straight Apache still had 99% availability (or nginx, which you can use for HLS but not HDS, still at 100%). This core restriction of HDS is the main concern to me.

Nick, the caching and stream separation are inter-related. Stream separation is beneficial for storage, but in HTTP storage properties are mirrored in the caches.

In terms of origin caching performance, the solution here is to put nginx in front of Apache. It's not recommended to run a naked origin; this is the same advice you would get if you were running PHP or other dynamic web technology. The general issue described is with FMS’s implementation of HDS. The FMS is a “smart” origin capable of doing dynamic packaging, etc. This is definitely more expensive than a “dumb” origin approach, but has lots of advantages, too. It is certainly possible to implement HDS using a dumb origin; Adobe just doesn’t ship such an implementation.

Also, Jens, I couldn't for the life of me find specs for F4X files. F4M and F4V, sure, but not the server manifest F4X. Are they available?

F4X is just a detail of the FMS implementation, and therefore doesn't require a spec. It's a box with global entries for all F4F fragments.

Great post! However, this only covers container/transport format differences between HDS and HLS. Do you plan to cover the scheme differences between the two (bootstrap approach versus sequence-number approach), performance and scalability aspects, ad splicing, and so on?

Yes, there will be more coverage coming soon, specifically around advertisement.

Nice article.

This is a really good read for me. I must admit that you are one of the best bloggers I have ever seen. Thanks for posting this informative article.

Thanks for the post. But I agree with Nick.

Plus: stream separation is not good news for caching. It creates fragmentation and increases request rates, which are the two critical aspects of delivery and cache efficiency.

PS: the 10 s segment recommendation is not an HLS constraint. It's just a good compromise between the underlying codec efficiency constraint (big H.264 GOPs = less bandwidth for the same quality) and reduced fragmentation.

@arnaud Yes; based on Apple’s guidance, the 10-second fragment size is related to the size of the m3u8 file.

“The main point to consider is that shorter segments result in more frequent refreshes of the index file, which might create unnecessary network overhead for the client. Longer segments will extend the inherent latency of the broadcast and initial startup time. A duration of 10 seconds of media per file seems to strike a reasonable balance for most broadcast content.” – https://developer.apple.com/library/ios/documentation/networkinginternet/conceptual/streamingmediaguide/FrequentlyAskedQuestions/FrequentlyAskedQuestions.html

You can gzip them, which reduces the size but not the request frequency. I'm not sure which comment from Nick you are referring to, since he made a couple. The core of this comparison is that fMP4 is the more modern format, but HLS is widely deployed. That is also why Flash Player officially supports HLS in addition to HDS with Adobe Primetime.
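Apple's trade-off can be put into rough numbers. The sketch below assumes a live client reloads the playlist about once per segment duration and starts playback after buffering three segments (the three-segment figure comes from the discussion above and is an assumption, not a guarantee for every player); it ignores encoding, packaging and CDN delays.

```python
from typing import Tuple


def live_tradeoff(segment_seconds: float, buffered_segments: int = 3,
                  window_seconds: float = 3600) -> Tuple[int, float]:
    """Return (playlist reloads per window, startup latency in seconds) under simple assumptions."""
    reloads = int(window_seconds // segment_seconds)  # assumed: one reload per segment duration
    latency = buffered_segments * segment_seconds  # assumed: playback starts after N segments
    return reloads, latency


for seconds in (2, 4, 10):
    reloads, latency = live_tradeoff(seconds)
    print(f"{seconds:>2} s segments: {reloads:>4} playlist reloads/hour, ~{latency:.0f} s startup latency")
```

Shorter segments cut latency linearly but multiply playlist requests by the same factor, which is why gzip alone does not remove the overhead.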

Thank you for this informative and detailed analysis.

Hi, thank you for this interesting article. While reading it, I was wondering whether you know about THEOplayer. THEOplayer is the ONLY HTML5 video player that can guarantee HTTP Live Streaming playback, cross-platform, without any plugins such as Flash or Silverlight. It would be great if you could let me know what you think.

You can check it out at: https://www.theoplayer.com

I look forward to hearing from you!
