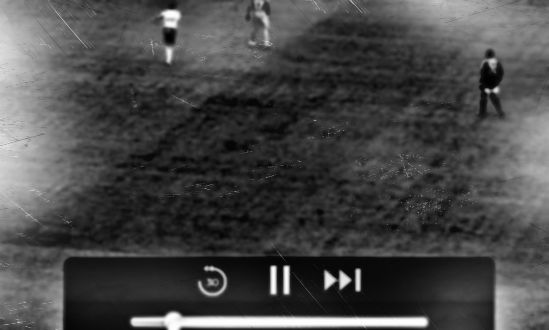

As mentioned in my previous post “The Ultimate Guide to Understanding Advanced Video Delivery with AIR for Mobile”, Adobe AIR can help significantly reduce fragmentation on devices. Here is another AIR use case that can drastically increase reach with minimal investment: live radio streaming.

RTMP is a widely deployed streaming protocol for radio stations. AIR makes it possible to tap into the existing server infrastructure and stream the content to devices. This example focuses on AAC+ audio streaming to Android devices over RTMP. For other platforms and/or codecs, please refer to the codec/protocol look-up table.
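To show what tapping into an existing RTMP server looks like at the protocol level, here is a minimal sketch that plays a live RTMP audio stream with the core Flash `NetConnection` and `NetStream` classes, without OSMF. The server URL and stream name are placeholders, and the class name `RtmpRadioSketch` is hypothetical. It only handles the success path and traces every other status code.

```actionscript
package
{
    import flash.display.Sprite;
    import flash.events.AsyncErrorEvent;
    import flash.events.NetStatusEvent;
    import flash.net.NetConnection;
    import flash.net.NetStream;

    // Hypothetical document class: plays a live RTMP audio stream without OSMF.
    // "rtmp://myserver.com/live" and "mystream" are placeholders.
    public class RtmpRadioSketch extends Sprite
    {
        private var connection:NetConnection;
        private var stream:NetStream;

        public function RtmpRadioSketch()
        {
            connection = new NetConnection();
            connection.addEventListener(NetStatusEvent.NET_STATUS, onNetStatus);
            connection.addEventListener(AsyncErrorEvent.ASYNC_ERROR, onAsyncError);
            // Connect to the server application; the stream name is passed to play() later
            connection.connect("rtmp://myserver.com/live");
        }

        private function onNetStatus(event:NetStatusEvent):void
        {
            trace(event.info.code);
            if (event.info.code == "NetConnection.Connect.Success")
            {
                stream = new NetStream(connection);
                stream.addEventListener(NetStatusEvent.NET_STATUS, onNetStatus);
                stream.addEventListener(AsyncErrorEvent.ASYNC_ERROR, onAsyncError);
                // Data callbacks such as onMetaData are invoked on the client object
                stream.client = { onMetaData: onMetaData };
                stream.bufferTime = 5; // seconds to buffer before playback starts
                stream.play("mystream", -1); // -1: play the live stream only
            }
        }

        private function onMetaData(info:Object):void
        {
            // Log every key so you can see what the encoder actually sends
            for (var key:String in info)
            {
                trace(key + ": " + info[key]);
            }
        }

        private function onAsyncError(event:AsyncErrorEvent):void
        {
            // Raised e.g. when the server calls a method the client object does not define
            trace(event.text);
        }
    }
}
```

Because `NetStream` plays audio directly, an audio-only stream needs no `Video` object. OSMF wraps this same connection logic and adds reconnect handling and the metadata plumbing used in the complete example.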

Features relevant to live radio streaming

- Broad device reach, independent of the underlying streaming capabilities

- Reduced latency, e.g. for live events, news, etc.

- Intelligent reconnect feature to ensure a continuous stream while switching networks

- Advanced metadata for title, artist and album

As explained in my previous summary, Adobe AIR addresses the first requirement: device fragmentation. Since AIR is supported on Android 2.2 and higher, RTMP with AAC+ works on the majority of Android devices without customization or device-specific testing.

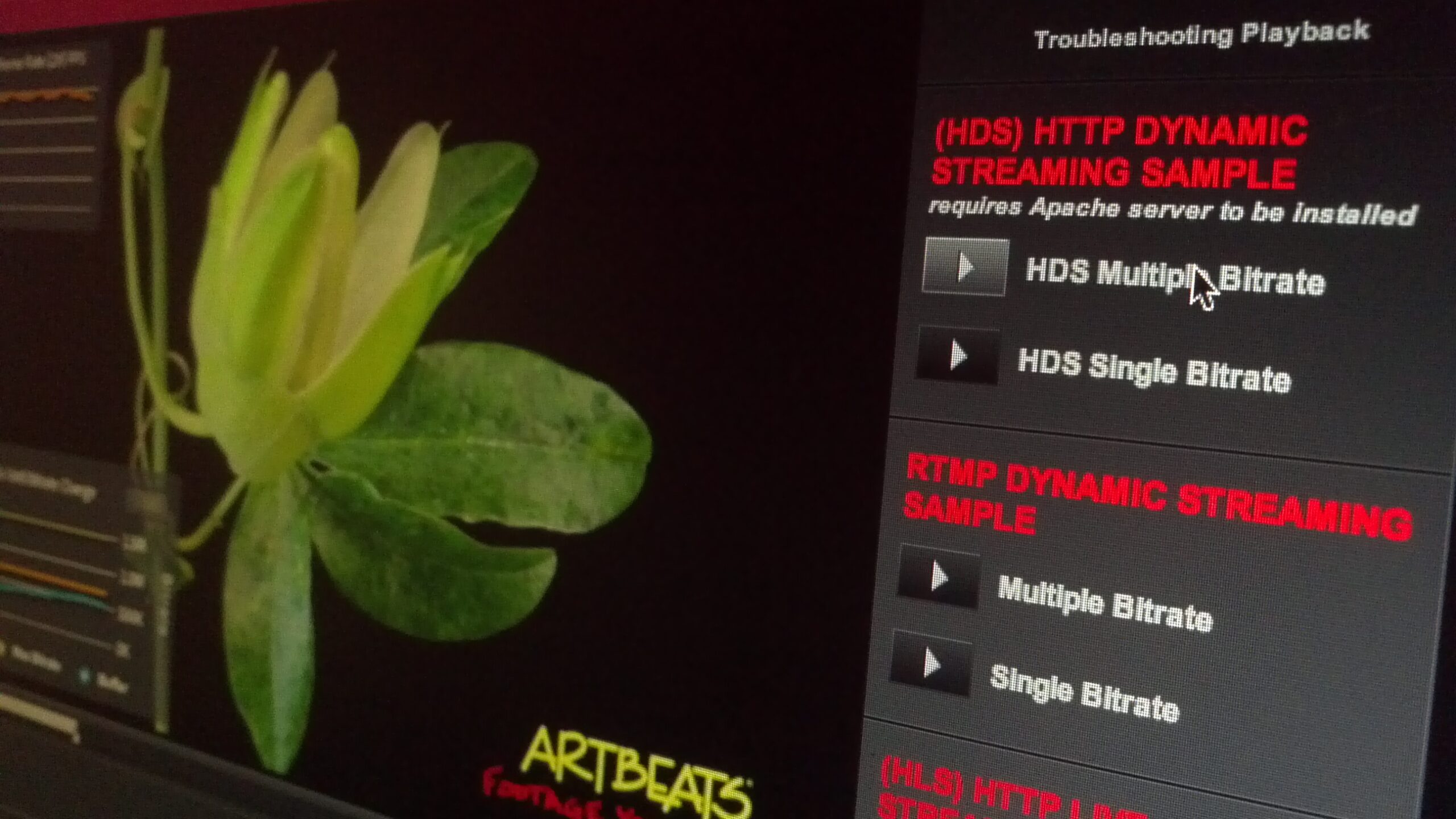

RTMP fulfills the second requirement: very low live latency. The protocol was originally designed for real-time two-way video communication, so it adds very little delay. That said, a buffer is recommended for smooth live playback on mobile; its length is user-defined and set to 5 seconds in this example. It is also possible to switch to HTTP-based delivery with HDS, which changes the latency characteristics and reconnect requirements.
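The complete example polls `mediaPlayer.bufferLength` with a `Timer`. As an alternative, OSMF's `MediaPlayer` dispatches `BufferEvent.BUFFERING_CHANGE` whenever it starts or stops buffering, so a buffering indicator can be event-driven. This is a sketch assuming an OSMF `MediaPlayer` and a Spark `Label`; the `BufferStatus` class and its status strings are hypothetical.

```actionscript
package
{
    import org.osmf.events.BufferEvent;
    import org.osmf.media.MediaPlayer;

    import spark.components.Label;

    // Hypothetical helper: shows whether the player is refilling its buffer.
    public class BufferStatus
    {
        private var player:MediaPlayer;
        private var label:Label;

        public function BufferStatus(player:MediaPlayer, label:Label, bufferSeconds:Number = 5)
        {
            this.player = player;
            this.label = label;
            // Seconds of audio to accumulate before (re)starting playback
            player.bufferTime = bufferSeconds;
            player.addEventListener(BufferEvent.BUFFERING_CHANGE, onBufferingChange);
        }

        private function onBufferingChange(event:BufferEvent):void
        {
            // event.buffering is true while the buffer is being filled
            label.text = event.buffering ? "Buffering..." : "Playing";
        }
    }
}
```

In the complete example this could be created in `init()` with `new BufferStatus(mediaPlayer, buffer_txt);`. Use it instead of the polling `Timer`, since both write to the same label.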


Next on the list is the reconnect feature, which is especially important for a protocol like RTMP that maintains a persistent connection. If the connection drops or switches between networks, this feature makes it less likely that playback pauses: the application tries to reconnect while the buffer plays out, and if it reconnects quickly enough, the live stream continues without interruption. The reconnect feature is enabled by default in the latest version of OSMF.
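OSMF's built-in reconnect logic is more involved, but the basic idea can be sketched with a raw `NetConnection`: watch the `NetStatusEvent` codes and retry the connection on a timer when it closes or fails. This is a simplified illustration, not OSMF's implementation. The `ReconnectingConnection` class, the limit of 5 attempts, and the 1-second delay are all assumptions. Unlike OSMF, it does not keep the existing buffer alive; the caller has to create a new `NetStream` and call `play()` again, so a short gap in playback is likely.

```actionscript
package
{
    import flash.events.NetStatusEvent;
    import flash.events.TimerEvent;
    import flash.net.NetConnection;
    import flash.utils.Timer;

    // Simplified reconnect illustration (NOT OSMF's internal logic).
    // Retry count and delay are arbitrary assumptions.
    public class ReconnectingConnection
    {
        private static const MAX_ATTEMPTS:int = 5;

        private var url:String;
        private var onConnected:Function; // receives the new NetConnection
        private var connection:NetConnection;
        private var retryTimer:Timer;
        private var attempts:int = 0;
        private var stopped:Boolean = false;

        public function ReconnectingConnection(url:String, onConnected:Function)
        {
            this.url = url;
            this.onConnected = onConnected;
            retryTimer = new Timer(1000, 1); // one-shot, 1 s between attempts
            retryTimer.addEventListener(TimerEvent.TIMER_COMPLETE, onRetry);
            connect();
        }

        // Close deliberately without triggering a reconnect
        public function close():void
        {
            stopped = true;
            retryTimer.stop();
            connection.close();
        }

        private function connect():void
        {
            if (connection != null)
            {
                connection.removeEventListener(NetStatusEvent.NET_STATUS, onNetStatus);
            }
            connection = new NetConnection();
            connection.addEventListener(NetStatusEvent.NET_STATUS, onNetStatus);
            connection.connect(url);
        }

        private function onNetStatus(event:NetStatusEvent):void
        {
            switch (event.info.code)
            {
                case "NetConnection.Connect.Success":
                    attempts = 0;
                    // Caller creates a new NetStream on this connection and plays again
                    onConnected(connection);
                    break;
                case "NetConnection.Connect.Closed":
                case "NetConnection.Connect.Failed":
                    if (!stopped && attempts < MAX_ATTEMPTS)
                    {
                        attempts++;
                        retryTimer.reset();
                        retryTimer.start();
                    }
                    break;
            }
        }

        private function onRetry(event:TimerEvent):void
        {
            connect();
        }
    }
}
```

A caller would pass a callback that creates a `NetStream` on the supplied connection and calls `play("mystream", -1)`, where `mystream` is a placeholder stream name.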

Last but not least: advanced metadata for title, artist, and album. Live metadata is embedded in the stream, a feature that has been supported for years. Advanced encoders, such as the audio-optimized Orban encoders and others, support live metadata.

For the best experience, the encoder should provide the current song's data as part of the metadata sent when the client subscribes to the stream, and send live cue points to update the information during playback. An intermediate server can provide this instead of the encoder; you can find more information in the live metadata article.
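The complete example leaves its two metadata handlers as stubs. One way to fill them in is a small helper that reads title, artist, and album from the object passed to `onMetaData` or from a cue point's `parameters`. This is a sketch: the `TrackInfo` class is hypothetical, and the key names `title`, `artist`, and `album` are assumptions. The actual field names depend on the encoder or intermediate server, so inspect what your stream sends (for example by tracing each key) before relying on them.

```actionscript
package
{
    // Hypothetical helper that turns a metadata object into display text.
    // ASSUMPTION: the keys "title", "artist" and "album" are encoder-specific.
    public class TrackInfo
    {
        public var title:String = "";
        public var artist:String = "";
        public var album:String = "";

        public static function fromObject(data:Object):TrackInfo
        {
            var track:TrackInfo = new TrackInfo();
            if (data != null)
            {
                // Missing keys read as undefined, which is falsy, so they are skipped
                if (data.title) track.title = String(data.title);
                if (data.artist) track.artist = String(data.artist);
                if (data.album) track.album = String(data.album);
            }
            return track;
        }

        // Formats as "Artist - Title (Album)", omitting empty parts
        public function toString():String
        {
            var parts:Array = [];
            if (artist) parts.push(artist);
            if (title) parts.push(title);
            var text:String = parts.join(" - ");
            if (album)
            {
                text = text ? text + " (" + album + ")" : album;
            }
            return text;
        }
    }
}
```

The stub handlers could then use it like this, where `title_txt` is the label defined in the complete example:

```actionscript
protected function onMetaDataHandler(info:Object):void
{
    title_txt.text = TrackInfo.fromObject(info).toString();
}

private function onCuePoint(event:TimelineMetadataEvent):void
{
    // Embedded cue points arrive as org.osmf.metadata.CuePoint markers
    var cuePoint:CuePoint = event.marker as CuePoint;
    if (cuePoint != null)
    {
        title_txt.text = TrackInfo.fromObject(cuePoint.parameters).toString();
    }
}
```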

And finally, the complete code:

```xml
<?xml version="1.0" encoding="utf-8"?>
<s:Application xmlns:fx="http://ns.adobe.com/mxml/2009"
               xmlns:s="library://ns.adobe.com/flex/spark"
               backgroundColor="#000000" applicationDPI="160"
               creationComplete="init()">
    <fx:Script>
        <![CDATA[
            import flash.events.TimerEvent;
            import flash.net.NetStream;
            import flash.utils.Timer;

            import mx.core.UIComponent;

            import org.osmf.containers.MediaContainer;
            import org.osmf.elements.AudioElement;
            import org.osmf.events.MediaElementEvent;
            import org.osmf.events.MediaPlayerStateChangeEvent;
            import org.osmf.events.TimelineMetadataEvent;
            import org.osmf.media.MediaElement;
            import org.osmf.media.MediaPlayer;
            import org.osmf.media.MediaPlayerState;
            import org.osmf.metadata.CuePoint;
            import org.osmf.metadata.TimelineMetadata;
            import org.osmf.net.NetClient;
            import org.osmf.net.StreamType;
            import org.osmf.net.StreamingURLResource;
            import org.osmf.traits.LoadTrait;
            import org.osmf.traits.MediaTraitType;

            private var container:MediaContainer;
            private var mediaPlayer:MediaPlayer;
            private var audioElement:MediaElement;
            private var bufferTimer:Timer;

            private function init():void
            {
                // OSMF MediaContainer, wrapped in a UIComponent so it can join the Spark display list
                container = new MediaContainer();
                var containerui:UIComponent = new UIComponent();
                containerui.addChild(container);
                addElement(containerui);

                // Source: live RTMP stream (replace the placeholder URL with your own)
                var resource:StreamingURLResource = new StreamingURLResource("rtmp://myserver.com/live/mystream", StreamType.LIVE);
                audioElement = new AudioElement(resource);
                // Monitor live cue points for updated metadata
                audioElement.addEventListener(MediaElementEvent.METADATA_ADD, onMetadataAdd);
                container.addMediaElement(audioElement);

                // OSMF MediaPlayer: configure it and add listeners before assigning the media,
                // so playback only starts when the user presses the button
                mediaPlayer = new MediaPlayer();
                mediaPlayer.autoPlay = false;
                // Define the buffer time in seconds
                mediaPlayer.bufferTime = 5;
                mediaPlayer.addEventListener(MediaPlayerStateChangeEvent.MEDIA_PLAYER_STATE_CHANGE, onMediaStateChange);
                mediaPlayer.media = audioElement;

                // Timer to refresh the buffer display every 200 ms
                bufferTimer = new Timer(200);
                bufferTimer.addEventListener(TimerEvent.TIMER, bufferUpdate);
                bufferTimer.start();
            }

            // Add the metadata handler for the first metadata sent when subscribing to the live stream
            protected function onMediaStateChange(e:MediaPlayerStateChangeEvent):void
            {
                switch (e.state)
                {
                    case MediaPlayerState.READY:
                        var loadTrait:LoadTrait = mediaPlayer.media.getTrait(MediaTraitType.LOAD) as LoadTrait;
                        // netStream is not declared on LoadTrait; it is read dynamically
                        // from the concrete load trait OSMF creates for streaming media
                        var netStream:NetStream = loadTrait["netStream"] as NetStream;
                        if (netStream != null && netStream.client is NetClient)
                        {
                            NetClient(netStream.client).addHandler("onMetaData", onMetaDataHandler);
                        }
                        break;
                }
            }

            protected function onMetaDataHandler(info:Object):void
            {
                // Parse the stream metadata information and assign it to the UI
            }

            // Monitor the live cue points during playback for updated metadata information
            private function onMetadataAdd(event:MediaElementEvent):void
            {
                if (event.namespaceURL == CuePoint.EMBEDDED_CUEPOINTS_NAMESPACE)
                {
                    var timelineMetadata:TimelineMetadata = audioElement.getMetadata(CuePoint.EMBEDDED_CUEPOINTS_NAMESPACE) as TimelineMetadata;
                    timelineMetadata.addEventListener(TimelineMetadataEvent.MARKER_TIME_REACHED, onCuePoint);
                }
            }

            private function onCuePoint(event:TimelineMetadataEvent):void
            {
                // Parse the cue point metadata information and assign it to the UI
            }

            // Display the current buffer length, truncated to two decimals
            private function bufferUpdate(e:TimerEvent):void
            {
                buffer_txt.text = (Math.floor(mediaPlayer.bufferLength * 100) / 100).toString();
            }

            // Start/stop button
            private function togglePlayback():void
            {
                if (mediaPlayer.playing)
                {
                    mediaPlayer.stop();
                    playbutton.label = "Start Playback";
                }
                else if (mediaPlayer.canPlay)
                {
                    // canPlay guards against play() throwing "The specified capability
                    // is not currently supported" before the stream has loaded
                    mediaPlayer.play();
                    playbutton.label = "Stop Playback";
                }
            }
        ]]>
    </fx:Script>

    <s:VGroup x="0" y="0" width="100%" height="100%">
        <s:Label id="buffer_txt" width="30%" height="5%" fontSize="20" text="Buffer" color="#FFFFFF"/>
        <s:Scroller id="contentScroller" height="75%" width="100%">
            <s:Group>
                <s:Image id="coverimg" x="10" y="130" width="300" height="202"/>
                <s:Label id="title_txt" x="12" y="70" width="300" height="31" color="#BBBBBB"/>
            </s:Group>
        </s:Scroller>
        <s:Button id="playbutton" width="100%" height="20%" label="Start Playback" click="togglePlayback()" fontSize="20"/>
    </s:VGroup>
</s:Application>
```

Conclusion

With a couple of lines of client code, it is possible to create a basic but highly functional radio application that uses existing workflows and reaches millions of Android users without having to worry about fragmentation.

Does this app work ONLY on Android?

I am testing on Win 7, AIR 3.2, Flex SDK 2.6, and it keeps giving me this error:

Exception fault: Error: The specified capability is not currently supported at org.osmf.media::MediaPlayer/getTraitOrThrow

You probably have an old version. Make sure to use the latest OSMF version (http://www.osmf.com), not the one built into Flex (remove the Flex OSMF.swc link and replace it with the latest).

@Jens Loeffler Hi, thank you for the reply. That was the first thing I tried, of course: I removed the one from the Flex SDK and linked the newest OSMF SWC (v1.6). Unfortunately, it didn't help. This should work on Windows as well, right? Do I need a specific codec installed on the system? What is a good free RTMP URL to test this with?

@slavomirdurej It should work on Windows as well, but it’s important to replace my placeholder URL with a proper RTMP URL. Unfortunately, I can’t share my source stream, but it was encoded with an Orban encoder: http://www.orban.com/products/streaming/opticodec-pc1010/

Hello,

I am wondering what version of OSMF was used for this app? I have tried the code with the latest v2.0 and the client cannot be accessed through the loadTrait variable.

@marpies I believe it was OSMF 1.6.1; let me know if it works. Also make sure to remove the default OSMF.SWC (old version) from the Flex SDK, otherwise it will override any manually included OSMF.SWC.

@Jens Loeffler I have tried both 1.6 and 1.6.1 and I have the same problem; to be more precise, the netStream property does not exist on the loadTrait object. The thing is, I am using Flash CS5, so I am putting the SWC file in Common/Configuration/ActionScript 3.0/libs/, which I believe is the correct way, as mentioned on Adobe's sites. There is no OSMF.swc in that folder by default.

Looking at the source code, LoadTrait object does not have a netStream property, is that correct?

Thank you for help.

@marpies That’s odd – I just tried again, and it seemed to be okay. Maybe it’s the source stream you are using, would you be able to share the URL? (you can do this privately with the contact form on the right).

@Jens Loeffler I am using this URL: http://ice.somafm.com/lush

The stream plays just fine but I cannot retrieve any info about it.

Thank you for trying it out.