One side effect of living in the year 2012 is that major events, such as the World Cup and the Super Bowl, can be watched over the Internet, which brings a new level of flexibility and experience. But occasionally there are still technical challenges. Just recently Super Bowl XLVI, available as an online event for the first time this year, received some harsh criticism for its time lag.

With streaming it’s to be expected that the game on the telly is a bit farther along than the game on the laptop, but we noticed the time kept slipping—from 15 seconds at the outset to almost 45 seconds delay—after about 10 minutes of watching. The announcement that Patriots’ coach Bill Belichick’s challenge of the Giants’ Mario Manningham’s catch had been declined [..] appeared on the television almost a minute before it appeared on the stream, near the end of the fourth quarter. [via StreamingMedia.com]

The challenge is that it was a simulcast, which gives viewers a direct point of comparison and, worse, dramatically affects the quality of the co-viewing experience. But why, for example, were some online streams during the World Cup almost in sync with the broadcast, while others lagged behind?

Prerequisites

First of all, it’s important to understand that a live TV broadcast is, depending on the content, often delayed on purpose. For example, a live concert has a “security delay” that allows editing in real time. A good example is the recent Super Bowl halftime show middle finger “accident”.

NBC had a five-second video delay on the show but managed to blur M.I.A’s raised finger only after it appeared on air. [via USA Today]

This means the source for both broadcast TV and online can be delayed, but because both share that delay, it does not cause the time difference. The distribution of broadcast TV is itself delayed, from delivery (e.g. via satellite) to the DVR in your living room. For the online stream of a live event and the TV broadcast to be in sync, the online video workflow delay should not exceed the delay caused by the traditional broadcast workflow.
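To make the reasoning concrete, here is a minimal Python sketch of why a shared source delay cancels out. The function name and the numbers used with it are my own illustrative assumptions, not measurements from any broadcast.

```python
def perceived_offset(source_delay, broadcast_delay, online_delay):
    """Seconds by which the online stream trails the TV picture.

    A negative result means the online stream is ahead of the TV.
    All three inputs are hypothetical delays in seconds.
    """
    tv_total = source_delay + broadcast_delay
    online_total = source_delay + online_delay
    # The shared source delay appears in both totals and cancels out.
    return online_total - tv_total


# The same workflow delays give the same offset, with or without
# a five-second "security delay" at the source.
print(perceived_offset(0.0, 7.0, 10.0))
print(perceived_offset(5.0, 7.0, 10.0))
```

Only the difference between the two distribution workflows is visible to someone watching both screens.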

Online workflow delay

What can cause a delay in the online video workflow? Here are a couple of potential sources.

Encoder – Adds a minor delay due to the encoding process, with limited options to eliminate it.

Client buffer – Helps to reduce interruptions caused by abrupt bandwidth changes. It can add up to a couple of seconds of delay.

CDN – Needs to deliver video to the end users via thousands of servers, which, depending on the architecture, can add seconds of delay.

But the most important component is the delivery protocol, which can add a meaningful delay.
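One way to reason about these sources is as a simple latency budget. The sketch below is only an illustration: the `Stage` class, the helper functions, and every number in `budget` are placeholder assumptions of mine, not measured values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    delay_s: float  # delay contributed by this stage, in seconds


def total_delay(stages):
    """Sum the delay of every stage in the workflow."""
    return sum(stage.delay_s for stage in stages)


def largest_contributor(stages):
    """Return the stage that adds the most delay."""
    return max(stages, key=lambda stage: stage.delay_s)


# Placeholder values for illustration only.
budget = [
    Stage("encoder", 1.0),
    Stage("cdn", 2.0),
    Stage("delivery protocol", 30.0),
    Stage("client buffer", 2.0),
]

print(total_delay(budget))
print(largest_contributor(budget).name)
```

Filling in values measured from your own workflow shows quickly which stage is worth tuning first.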

Delivery options

a) Streaming

The two most common streaming protocols are RTMP and RTSP. RTSP has been in decline over the years, while RTMP (Real Time Messaging Protocol) is very popular, but only supported by Flash and AIR based clients. It was originally designed as a low latency protocol for multi-way video communication, e.g. video chat. It requires special streaming servers, which is not the CDNs’ favorite setup, since the majority of the CDN infrastructure is based on standard HTTP delivery. But given the large penetration and popularity of RTMP, CDNs provide dedicated, large-scale capacity.

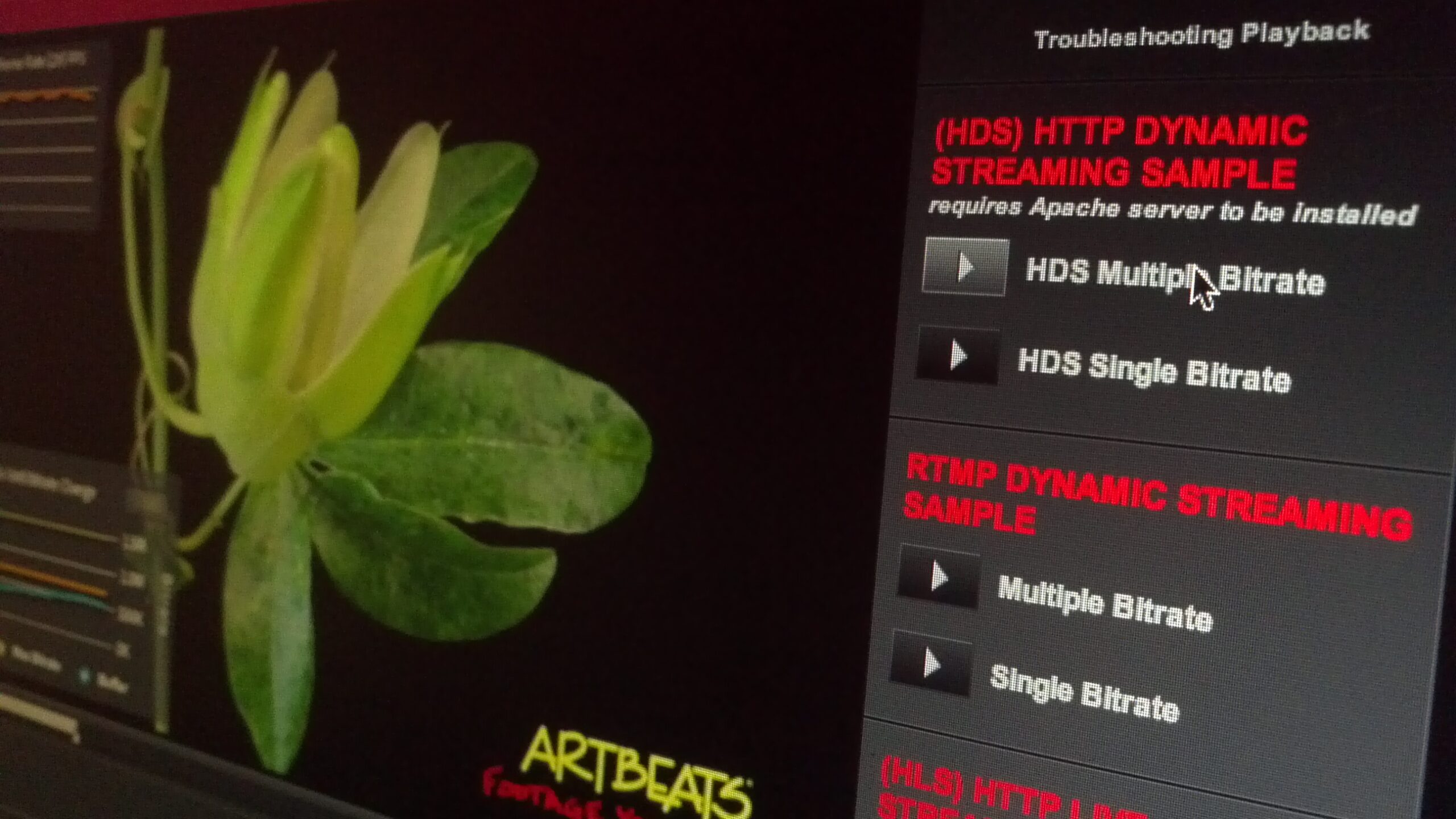

b) HTTP

HTTP delivery, by contrast, just uses regular HTTP servers. Popular formats are HDS (fMP4), HLS (MPEG-2 TS), and Smooth Streaming (fMP4). Although it scales easily and takes advantage of HTTP caching, configuring it for low latency is harder and depends on the characteristics of the protocol, e.g. the recommended segment size, or simple caching rules. Certain flavors, for example HDS, are better suited for low latency delivery, while others, like HLS, create a longer delay with the default configuration.
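The effect of segment size can be estimated with a rough model. The sketch below assumes the player starts playback a fixed number of whole segments behind the live edge; the function name and the example values (10-second and 2-second segments, three segments held back) are my assumptions for illustration, not settings taken from any specific player or CDN.

```python
def segment_latency_floor(segment_seconds, segments_held_back):
    """Lower bound, in seconds, on the delay added by segmented HTTP delivery.

    Assumes the player waits for `segments_held_back` complete segments
    before it starts playing. Encoder, CDN, and network delay come on top.
    """
    if segment_seconds <= 0:
        raise ValueError("segment_seconds must be positive")
    if segments_held_back < 0:
        raise ValueError("segments_held_back must not be negative")
    return segment_seconds * segments_held_back


# Long segments with three held back, versus short segments.
print(segment_latency_floor(10, 3))
print(segment_latency_floor(2, 3))
```

Under this model, shortening the segments is the most direct lever, at the cost of more requests per viewer and less forgiving caching.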

Real world test

By now it’s clear that a lot of variables can affect latency. But beyond the theory, can I prove that low latency can work?

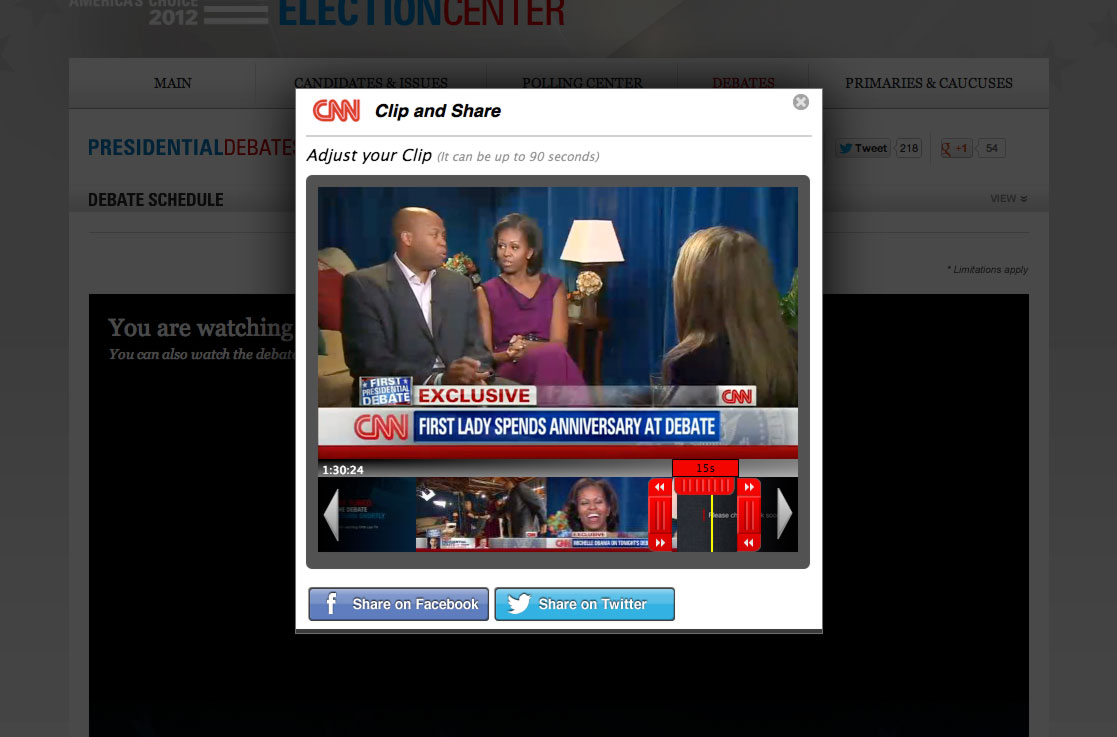

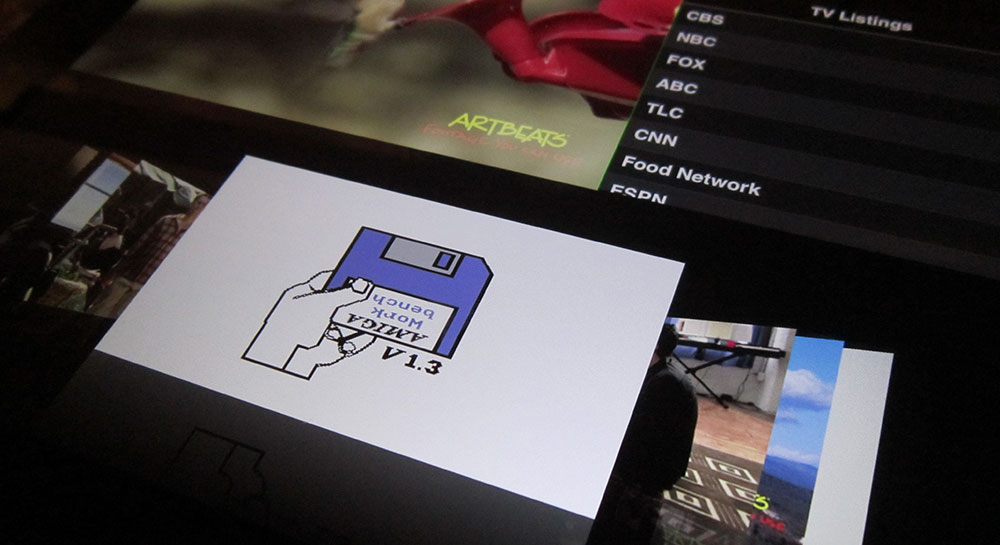

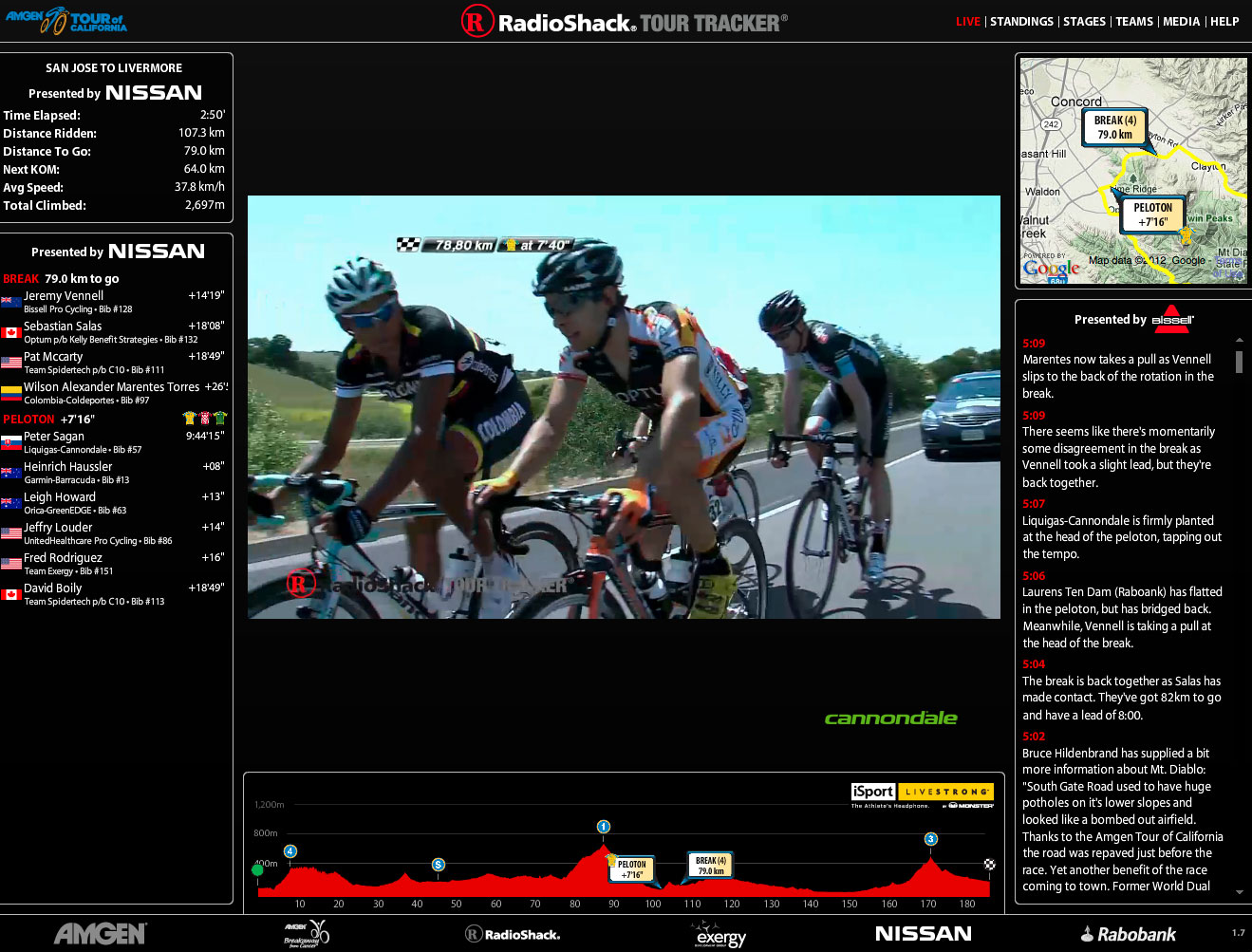

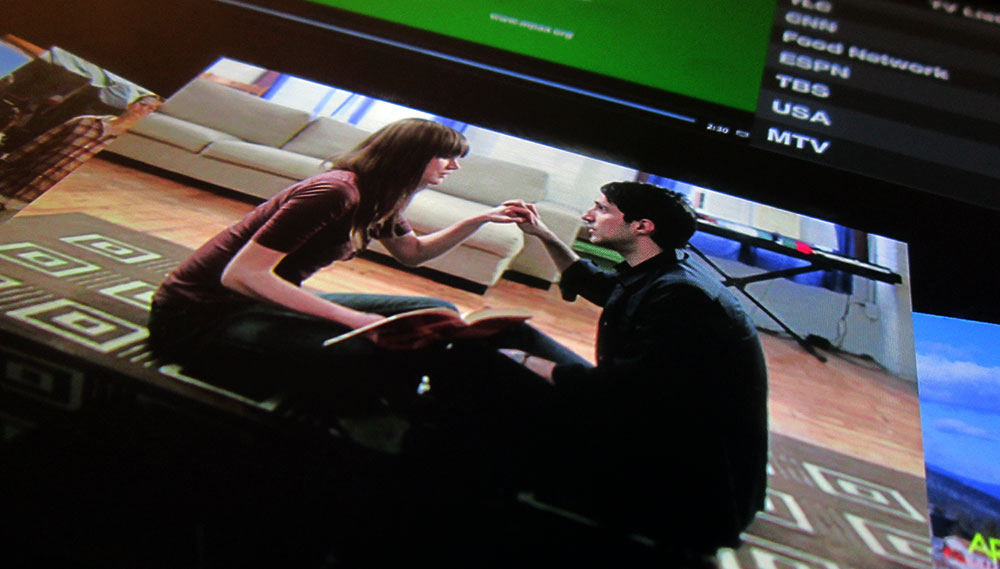

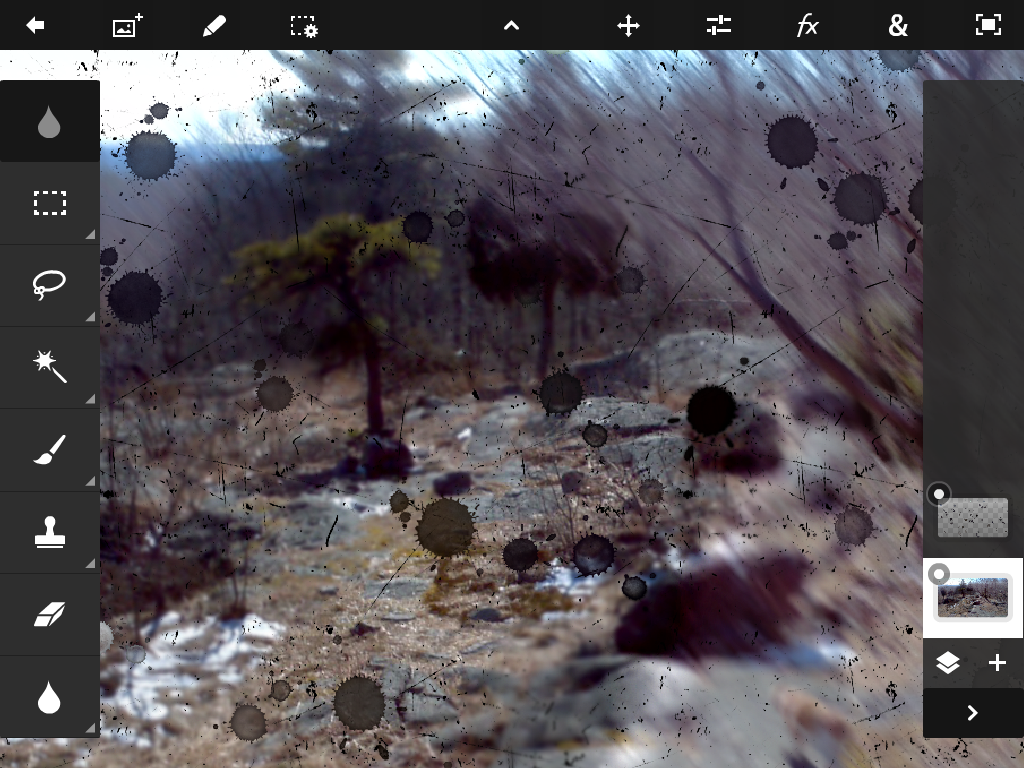

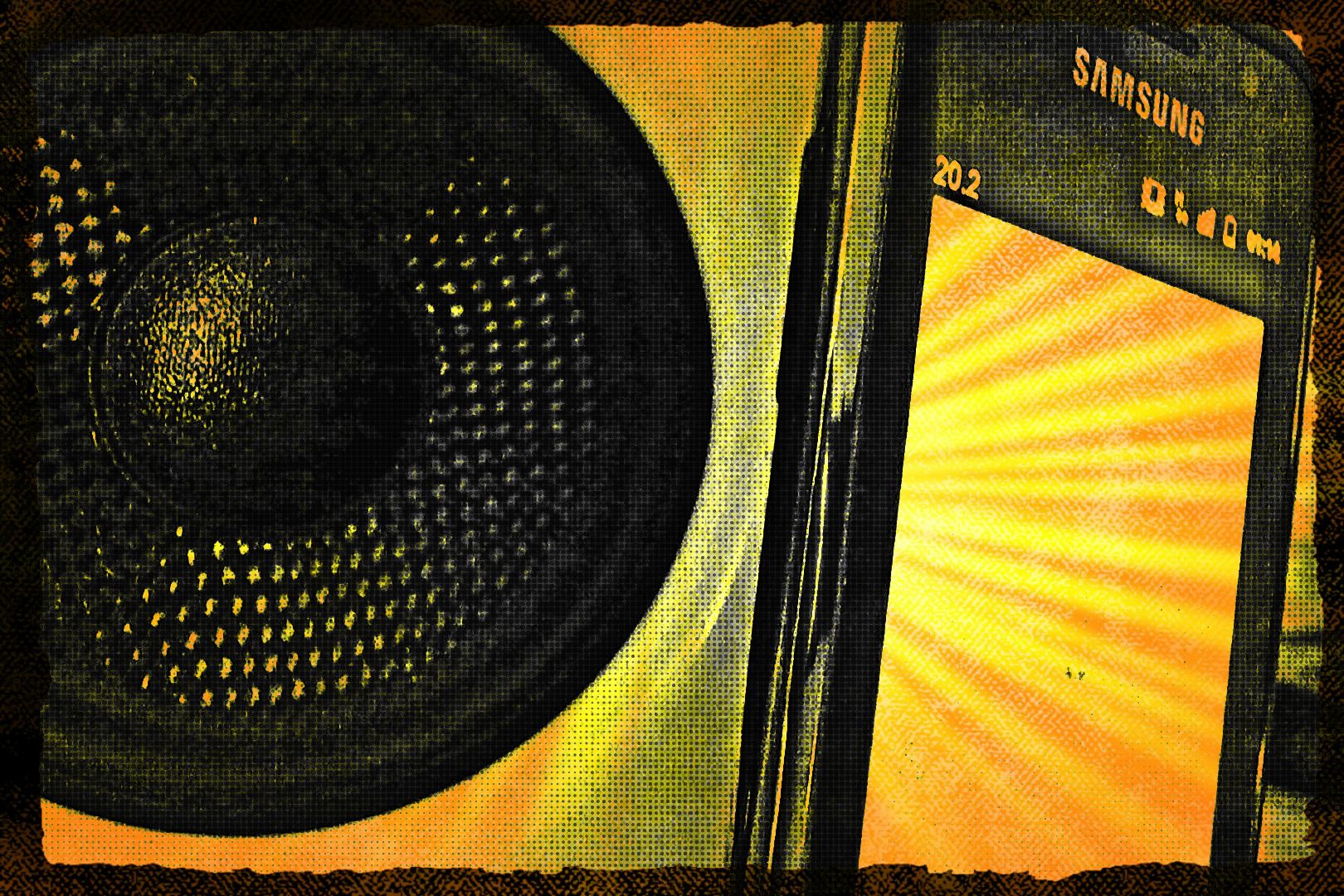

Here is an example of a popular RTMP based AIR mobile application during a true live event. This is not a staged setup, but a real world end-to-end latency test, measuring the time between the live event, and the video arriving on the device.

Given that the TV broadcast is delayed as well, the mobile device will likely receive the event at the same time as the TV, or in some cases even a bit earlier.

Conclusion

This is not an endorsement of RTMP over HTTP delivery, since HTTP video delivery has a lot of advantages, for example easy-to-implement DVR functionality. Still, RTMP is the best solution for out-of-the-box low latency live delivery. Obviously HTTP delivery is evolving and can be tuned to reduce latency, but as we experienced, even a couple of seconds of delay can affect the viewer’s satisfaction. It’s important to understand the characteristics of each workflow, and to determine what is relevant to your audience.

Mystery solved … for now.

Does anyone know if I could eliminate latency by streaming RTMP over local WiFi at the event, instead of pushing the stream up to the internet and then back down?

thanks Ivan

@ivanreel Yes, it would reduce the latency even further, but you would need a local RTMP server. You could also use RTMFP, which is P2P based, and e.g. create a local P2P group in the stadium. But that would introduce P2P latency.

Seb666, the article is great. At least now I can explain why it doesn’t work. Thank you! 🙂

I am using HLS and it gives a very long delay of around 15 seconds. I am looking into how Skype works: almost zero latency, less than 500 ms.
